AWS SageMaker Neo is an Amazon Web Services (AWS) service that optimizes machine learning models for edge devices. It compiles, quantizes, and optimizes a model for efficient execution on specific hardware, which reduces both latency and power consumption. SageMaker Neo supports popular deep learning frameworks such as TensorFlow and PyTorch, so models built with them can be compiled and deployed on edge devices such as BrainyPi for real-time edge computing applications. Let's explore AWS SageMaker on Brainy Pi!

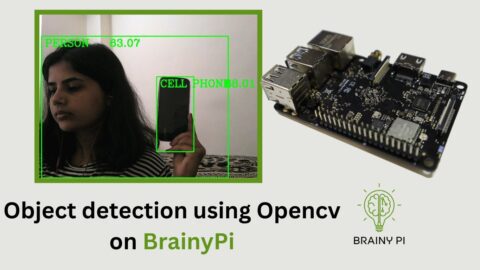

BrainyPi is a single-board computer designed for industrial AI and machine learning applications, which makes it a good platform for running machine learning on the edge. In this blog post, we will walk you through compiling a model with AWS SageMaker and running inference with it on BrainyPi. Once compiled, the model runs directly on the board, so you can build real-time AI applications on BrainyPi for a range of industries.

Prerequisites:

Before we dive into the details, make sure you have the following prerequisites in place:

- An AWS SageMaker trained machine learning model: You should have a machine learning model trained using AWS SageMaker. This model should be saved in a format compatible with BrainyPi, such as Keras, TensorFlow, TFLite, PyTorch, ONNX, or MXNet.

  For example purposes in this blog, we will be using a pre-trained object detection model, `coco_ssd_mobilenet_v1_1.0_quant_2018_06_29`, trained on the COCO dataset.

- A BrainyPi board: You should have a BrainyPi board with Rbian OS installed. You can refer to the BrainyPi documentation for instructions on setting up your board.

- AWS account: You should have an AWS account.

Set up AWS Credentials

- Create an administrator user for the tutorial:
  - Go to the AWS console and search for IAM.
  - Go to Users and click on Add user.
  - In “Set Permissions”, choose the option “Attach policies directly”.
  - Search for the “AdministratorAccess” policy and select it.
  - Create the user.
- Generate access keys for the user. The CSV file contains two credentials:
  - Access Key ID
  - Secret Access Key
- Open a terminal on BrainyPi and run the commands below, replacing YOUR_ACCESS_KEY and YOUR_SECRET_KEY with the values from the CSV file:

      mkdir -p ~/.aws/ && touch ~/.aws/credentials
      cat << EOF > ~/.aws/credentials
      [default]
      aws_access_key_id = YOUR_ACCESS_KEY
      aws_secret_access_key = YOUR_SECRET_KEY
      EOF

This will set up AWS credentials on your Brainy Pi.
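
Before moving on, it can help to confirm that the credentials file was written as intended. The snippet below is a minimal sketch that only inspects the file locally; it does not contact AWS, so it cannot tell whether the keys are actually valid. The function name `missing_credential_keys` and the placeholder check are our own assumptions for illustration.

```python
import configparser
from pathlib import Path

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")
# Placeholder values from the commands above; they must be replaced with real keys.
PLACEHOLDERS = {"YOUR_ACCESS_KEY", "YOUR_SECRET_KEY"}


def missing_credential_keys(path=None, profile="default"):
    """Return the required keys that are absent, empty, or still placeholders."""
    path = Path(path) if path is not None else Path.home() / ".aws" / "credentials"
    # Interpolation is disabled so that a '%' inside a key is read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(path)  # a missing file simply yields no sections
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    missing = []
    for key in REQUIRED_KEYS:
        value = parser.get(profile, key, fallback="").strip()
        if not value or value in PLACEHOLDERS:
            missing.append(key)
    return missing
```

Calling `missing_credential_keys()` with no arguments checks `~/.aws/credentials`; an empty list means both keys are present in the `[default]` profile.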

Compile model for BrainyPi using AWS SageMaker

As an example, we will compile the coco_ssd_mobilenet_v1_1.0_quant_2018_06_29 object detection model for BrainyPi. This is a TFLite model.

- Install dependencies:

      sudo apt-get install python3-pip python3-matplotlib python3-scipy python3-opencv
      pip install boto3 numpy
      sudo pip3 install jupyter

- For ease of use, the steps for compiling the model for BrainyPi have been converted to a Jupyter notebook. Clone the repository containing the notebook:

      git clone https://github.com/brainypi/brainypi-aws-sagemaker-example.git

- Then open Jupyter Notebook by running the command:

      jupyter-notebook

- The notebook interface will open in the browser. Navigate to the `brainypi-aws-sagemaker-example` folder and open the `aws-sagemaker-inference.ipynb` file.
- Change the `AWS_REGION` variable in the first cell. Note: If you have previously run the notebook, then you may also have to change these variables:
  - `role_name` in cell 1
  - `bucket` in cell 3
- Once the notebook is open, click on “Cell” and choose “Run All”.
- This will compile the model for BrainyPi and run a sample inference program to check whether the compilation succeeded.
- The compiled model will be downloaded to the folder `brainypi-aws-sagemaker-example` as `compiled-detect.tar.gz`.
- This compiled model can be copied to other BrainyPi devices and reused without connecting to AWS or using AWS resources.
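
The notebook takes care of the compilation step for you. For readers curious about what such a step can look like, here is a rough sketch using the boto3 `create_compilation_job` and `describe_compilation_job` calls. It is not the notebook's code: the input tensor name and shape, the generic ARM64 Linux target, the 900 second limit, and all helper names are assumptions made for illustration.

```python
import json
import time


def build_compilation_request(job_name, role_arn, model_s3_uri, output_s3_uri):
    """Build the keyword arguments for SageMaker's CreateCompilationJob call."""
    return {
        "CompilationJobName": job_name,
        "RoleArn": role_arn,
        "InputConfig": {
            "S3Uri": model_s3_uri,
            # Assumed input tensor name and shape for the quantized SSD MobileNet model.
            "DataInputConfig": json.dumps(
                {"normalized_input_image_tensor": [1, 300, 300, 3]}
            ),
            "Framework": "TFLITE",
        },
        "OutputConfig": {
            "S3OutputLocation": output_s3_uri,
            # Assumption: BrainyPi is targeted as a generic 64-bit ARM Linux platform.
            "TargetPlatform": {"Os": "LINUX", "Arch": "ARM64"},
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 900},
    }


def wait_for_compilation(client, job_name, poll_seconds=30):
    """Poll the job until it reaches a terminal state and return that status."""
    while True:
        response = client.describe_compilation_job(CompilationJobName=job_name)
        status = response["CompilationJobStatus"]
        if status in ("COMPLETED", "FAILED", "STOPPED"):
            return status
        time.sleep(poll_seconds)


def compile_model(region, **request_args):
    """Submit the compilation job and block until it finishes."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("sagemaker", region_name=region)
    request = build_compilation_request(**request_args)
    client.create_compilation_job(**request)
    return wait_for_compilation(client, request["CompilationJobName"])
```

Keeping the request builder separate from the network call makes it easy to inspect or adjust the request before any AWS resources are used.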

Run inference on the compiled model without connecting to AWS

To run inference with the compiled model, we need to write inference code for it using the DLR library. As an example, we will use ready-to-use inference code for the coco_ssd_mobilenet_v1_1.0_quant_2018_06_29 object detection model, written for BrainyPi.

- Navigate to the `brainypi-aws-sagemaker-example` git repository.
- Check if the folder `dlr_model` exists. If it does not exist in the repository folder, then:
  - Copy the model file `compiled-detect.tar.gz` into this folder.
  - Extract the model:

        mkdir ./dlr_model
        tar -xzvf ./compiled-detect.tar.gz --directory ./dlr_model

- Now, run the commands:

      curl https://farm9.staticflickr.com/8463/8132484846_8ce4da18ba_z.jpg --output input_image.jpg
      python3 object-detection-example.py

  This will run the `coco_ssd_mobilenet_v1_1.0_quant_2018_06_29` object detection on the input image `input_image.jpg` and show the output.

See the full code here – https://github.com/brainypi/brainypi-aws-sagemaker-example/blob/main/object-detection-example.py
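
If you would like to write your own inference code instead, the sketch below shows the general shape of a DLR-based detector. It is not the repository's script. It assumes the compiled model takes a 300x300 RGB `uint8` image and returns boxes, class IDs, and scores as its first three outputs, with boxes given as normalized `(ymin, xmin, ymax, xmax)`; the function names are our own.

```python
def to_detections(boxes, classes, scores, image_width, image_height, min_score=0.5):
    """Keep detections above min_score and convert their boxes to pixel coordinates."""
    detections = []
    for box, class_id, score in zip(boxes, classes, scores):
        if score < min_score:
            continue
        ymin, xmin, ymax, xmax = box  # assumed normalized box layout
        detections.append({
            "class_id": int(class_id),
            "score": float(score),
            # Stored as (left, top, right, bottom) in pixels.
            "box": (
                round(xmin * image_width),
                round(ymin * image_height),
                round(xmax * image_width),
                round(ymax * image_height),
            ),
        })
    return detections


def detect_objects(model_dir, image_path, input_size=(300, 300)):
    """Run the compiled model on one image; needs dlr, numpy and opencv installed."""
    import cv2
    import numpy as np
    from dlr import DLRModel

    image = cv2.imread(image_path)
    height, width = image.shape[:2]
    rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    batch = np.expand_dims(cv2.resize(rgb, input_size), axis=0).astype("uint8")

    model = DLRModel(model_dir, dev_type="cpu")
    outputs = model.run(batch)
    # Assumed output order: boxes, class IDs, scores (each with a leading batch axis).
    boxes, classes, scores = outputs[0][0], outputs[1][0], outputs[2][0]
    return to_detections(boxes, classes, scores, width, height)
```

With the model extracted as described above, `detect_objects("./dlr_model", "input_image.jpg")` would return a list of dictionaries, one per detected object, provided the output layout matches these assumptions.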