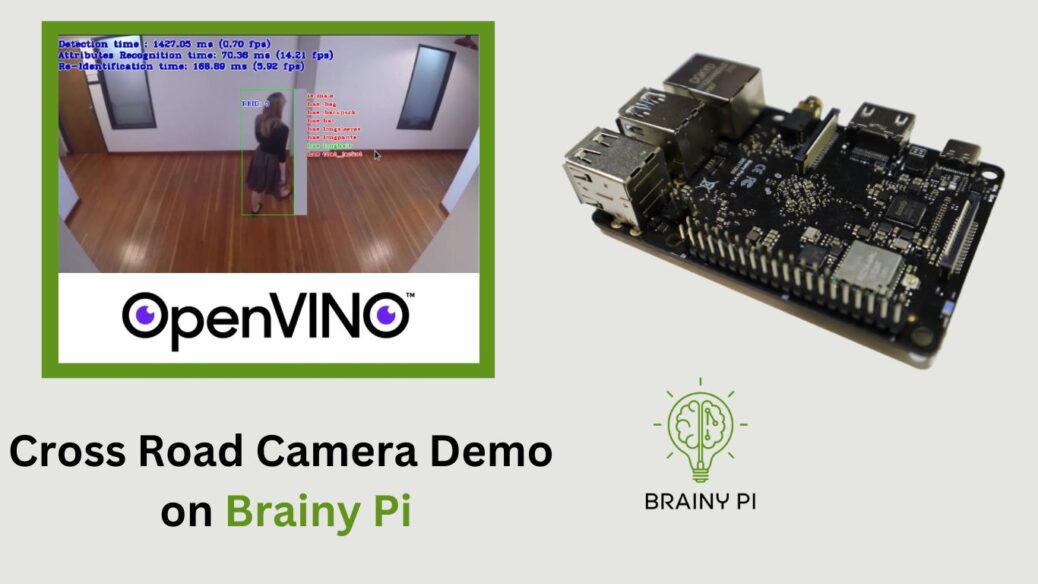

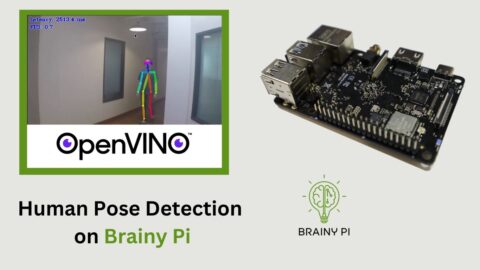

In this blog post, we will explore an AI pipeline for person detection, attribute recognition, and reidentification using OpenVINO on BrainyPi. OpenVINO, short for Open Visual Inference and Neural Network Optimization, is a toolkit from Intel that lets developers deploy deep learning models efficiently on a range of hardware platforms. Let’s implement the Crossroad Camera Demo on BrainyPi!

The ability to detect, recognize, and reidentify people is a crucial component of many computer vision applications, such as surveillance systems, crowd analysis, and personalized marketing. By combining OpenVINO’s optimized inference with the BrainyPi platform, we can run this whole pipeline efficiently on an edge device.

Installing OpenVINO

To get started, we need to install OpenVINO and its dependencies on BrainyPi. Open a terminal and execute the following command:

sudo apt install openvino-toolkit libopencv-dev

This command will install OpenVINO and the necessary OpenCV development files on your BrainyPi system.
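To confirm the installation before moving on, you can check the package status and the environment script. The snippet below is a minimal sketch that assumes the two package names from the apt command above and the /opt/openvino/setupvars.sh location used in the next section; pkg_installed and check_openvino_install are hypothetical helper names, not part of OpenVINO.

```bash
#!/usr/bin/env bash
# Sketch: verify the packages from the apt command and the environment script.
# Assumes the package names above and the setupvars.sh path used in this post.

# Return 0 only if a Debian package is fully installed.
pkg_installed() {
  local status
  status=$(dpkg-query -W -f='${Status}' "$1" 2>/dev/null) || status=""
  [[ "$status" == "install ok installed" ]]
}

# Hypothetical helper: check both packages, then the setupvars.sh script.
check_openvino_install() {
  local setupvars="${1:-/opt/openvino/setupvars.sh}" pkg
  for pkg in openvino-toolkit libopencv-dev; do
    if ! pkg_installed "$pkg"; then
      echo "package not installed: $pkg" >&2
      return 1
    fi
  done
  if [[ ! -f "$setupvars" ]]; then
    echo "environment script not found: $setupvars" >&2
    return 1
  fi
  echo "OpenVINO packages installed; environment script at $setupvars"
}
```

After sourcing the file, run check_openvino_install; a non-zero exit status and the message on stderr point at the missing piece.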

Compiling Demos

Once OpenVINO is installed, we can compile the demos from the Open Model Zoo. These demos are good starting points for understanding and exploring what OpenVINO can do. Follow the steps below to compile them:

Set up the OpenVINO environment by sourcing the setupvars.sh script:

source /opt/openvino/setupvars.sh

Clone the Open Model Zoo repository, which contains the demos, into your home directory (later commands expect it at ~/open_model_zoo) and change into its demos folder:

cd ~/
git clone --recurse-submodules https://github.com/openvinotoolkit/open_model_zoo.git
cd open_model_zoo/demos/

Now, build the demos by running the build_demos.sh script:

./build_demos.sh

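This compiles the demo applications so they are ready to run on BrainyPi. By default, build_demos.sh writes the binaries to ~/omz_demos_build, which is the location the run command later in this post uses.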
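The compile steps above can also be collected into a small script. This is a sketch under the paths this post uses (/opt/openvino/setupvars.sh, ~/open_model_zoo and ~/omz_demos_build). The helper names build_omz_demos and demo_bin_dir are hypothetical, and the <build dir>/<arch>/Release output layout, with x86_64 hosts named intel64, is an assumption about build_demos.sh; on BrainyPi, uname -m reports aarch64, which matches the binary path used when running the demo.

```bash
#!/usr/bin/env bash
# Sketch: the compile steps as one reusable function.
# Assumes /opt/openvino/setupvars.sh, ~/open_model_zoo and ~/omz_demos_build.

# Hypothetical helper: where build_demos.sh is expected to place binaries.
# Assumption: <build dir>/<arch>/Release, with x86_64/amd64 named "intel64".
demo_bin_dir() {
  local build_dir="$1" arch="${2:-$(uname -m)}"
  case "$arch" in
    x86_64|amd64) arch="intel64" ;;
  esac
  echo "$build_dir/$arch/Release"
}

# The body runs in a subshell, so set -e and the sourced OpenVINO
# environment do not leak into the calling (interactive) shell.
build_omz_demos() (
  # Load the OpenVINO environment first; stop if the script is missing.
  source /opt/openvino/setupvars.sh || exit 1
  set -eo pipefail
  # Clone only once; later runs reuse the existing checkout.
  if [[ ! -d "$HOME/open_model_zoo" ]]; then
    git clone --recurse-submodules \
      https://github.com/openvinotoolkit/open_model_zoo.git "$HOME/open_model_zoo"
  fi
  cd "$HOME/open_model_zoo/demos"
  ./build_demos.sh
  # List the compiled demos so you can confirm crossroad_camera_demo is there.
  ls "$(demo_bin_dir "$HOME/omz_demos_build")"
)
```

Save it as, for example, build_omz.sh, then run: source build_omz.sh && build_omz_demos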

Running the Crossroad Camera Demo

With the demos compiled, we can now download the required models and run the Crossroad Camera Demo. Follow these steps:

Download the models required for the demo by executing the following command:

omz_downloader --list ~/open_model_zoo/demos/crossroad_camera_demo/cpp/models.lst -o ~/models/ --precision FP16
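Before running the demo, it can help to confirm that every downloaded model has both its .xml (network topology) and .bin (weights) file. The function below is a sketch that assumes the <output dir>/<source>/<model>/<precision>/<model>.xml layout implied by the model paths in the run command; check_ir_files is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: confirm each downloaded IR model has its .xml and matching .bin.
# Assumes the ~/models/intel/<model>/<precision>/<model>.xml layout used here.
check_ir_files() {
  local models_dir="${1:-$HOME/models}" precision="${2:-FP16}"
  local xml count=0 missing=0
  while IFS= read -r xml; do
    count=$((count + 1))
    if [[ -f "${xml%.xml}.bin" ]]; then
      echo "ok       $xml"
    else
      echo "missing  ${xml%.xml}.bin" >&2
      missing=1
    fi
  done < <(find "$models_dir" -type f -path "*/$precision/*.xml" | sort)
  # Succeed only if at least one model was found and none lack weights.
  [[ $count -gt 0 && $missing -eq 0 ]]
}
```

For example, check_ir_files ~/models FP16 lists each model it finds and returns a non-zero status if nothing was downloaded or a weights file is missing.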

Download the test video that we will use for demonstration purposes:

cd ~/
wget https://raw.githubusercontent.com/intel-iot-devkit/sample-videos/master/people-detection.mp4

Once the models and the test video are downloaded, you can run the Crossroad Camera Demo with the following command:

~/omz_demos_build/aarch64/Release/crossroad_camera_demo \
  -i ~/people-detection.mp4 \
  -m ~/models/intel/person-vehicle-bike-detection-crossroad-0078/FP16/person-vehicle-bike-detection-crossroad-0078.xml \
  -m_pa ~/models/intel/person-attributes-recognition-crossroad-0230/FP16/person-attributes-recognition-crossroad-0230.xml \
  -m_reid ~/models/intel/person-reidentification-retail-0287/FP16/person-reidentification-retail-0287.xml
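The run command repeats the same directory layout for all three models. The sketch below factors it out so the precision or models directory can be changed in one place. MODELS_DIR, PRECISION, DEMO_BIN, model_xml and run_crossroad_demo are hypothetical names chosen for this example; the demo flags (-i, -m, -m_pa, -m_reid) are exactly the ones used in the command above.

```bash
#!/usr/bin/env bash
# Sketch: the crossroad_camera_demo command with the repeated paths factored out.
# Hypothetical variables; override them in the environment if your layout differs.
MODELS_DIR="${MODELS_DIR:-$HOME/models}"
PRECISION="${PRECISION:-FP16}"
DEMO_BIN="${DEMO_BIN:-$HOME/omz_demos_build/aarch64/Release/crossroad_camera_demo}"

# IR .xml path of an Intel model as laid out by: omz_downloader -o "$MODELS_DIR"
model_xml() {
  echo "$MODELS_DIR/intel/$1/$PRECISION/$1.xml"
}

# Run the demo on a video (defaults to the sample downloaded earlier).
run_crossroad_demo() {
  local input="${1:-$HOME/people-detection.mp4}"
  "$DEMO_BIN" -i "$input" \
    -m "$(model_xml person-vehicle-bike-detection-crossroad-0078)" \
    -m_pa "$(model_xml person-attributes-recognition-crossroad-0230)" \
    -m_reid "$(model_xml person-reidentification-retail-0287)"
}
```

After sourcing the file, run_crossroad_demo uses ~/people-detection.mp4 by default, or you can pass another video path as the first argument. Switching precision only requires setting PRECISION (for example PRECISION=FP32), provided those models were downloaded with the matching --precision value.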