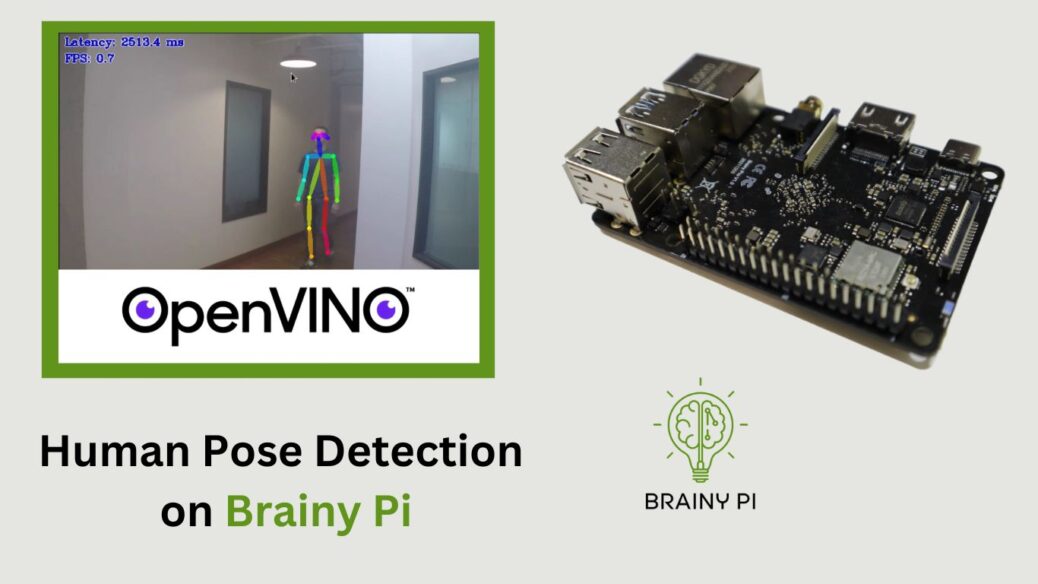

Are you a developer or entrepreneur interested in building products with AI and computer vision? This post walks through using OpenVINO on Brainy Pi to predict human poses in video: installing OpenVINO, compiling the Open Model Zoo demos, downloading a pretrained model, and running the human pose estimation demo on a sample clip. The demo shows what OpenVINO can do on the board and gives you a solid starting point for your own computer vision projects. So, let’s dive right in and implement Human Pose Detection on Brainy Pi together!

Installing OpenVINO

Before we get to the demo, let’s install OpenVINO and its dependencies on Brainy Pi.

Open a terminal on your Brainy Pi device and enter the following command to install OpenVINO and the necessary OpenCV development files:

sudo apt install openvino-toolkit libopencv-dev

This command installs OpenVINO together with the OpenCV development files.
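Before moving on, it can help to confirm that the install actually landed. The following is a minimal sketch, assuming the package places its environment script at /opt/openvino/setupvars.sh (the path sourced in the next section). The function name `openvino_ready` and the `OPENVINO_ROOT` override are hypothetical, added only for illustration:

```shell
# Hypothetical helper: succeeds if setupvars.sh exists under the OpenVINO root.
# OPENVINO_ROOT is an illustrative override; /opt/openvino is the path this post uses.
openvino_ready() {
    root="${1:-${OPENVINO_ROOT:-/opt/openvino}}"
    [ -f "$root/setupvars.sh" ]
}

if openvino_ready; then
    echo "OpenVINO found"
else
    echo "OpenVINO not found; re-run the apt install step"
fi
```

If the check fails, the package may have installed to a different location on your image, so treat the default path as an assumption rather than a guarantee.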

Compiling Demos

Now that OpenVINO is installed, we can compile the demos from Open Model Zoo. They are a practical way to explore what OpenVINO can do. Here’s how to compile them:

Set up the OpenVINO environment by sourcing the setupvars.sh script:

source /opt/openvino/setupvars.sh

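Clone the Open Model Zoo repository, which contains the demos, using the following commands. Run them from your home directory, since later commands assume the repository is at ~/open_model_zoo:

git clone --recurse-submodules https://github.com/openvinotoolkit/open_model_zoo.git
cd open_model_zoo/demos/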

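Build the demos by executing the build_demos.sh script:

./build_demos.sh

This will compile the demo applications and make them ready for execution. On Brainy Pi, the run command used below expects the binaries under ~/omz_demos_build/aarch64/Release/.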
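To confirm that the build produced the pose demo, you can check for the binary. This is a sketch under the assumption that the build output lands in ~/omz_demos_build/aarch64/Release, the directory used by the run command later in this post. `demo_built` and `DEMO_DIR` are illustrative names, not part of Open Model Zoo:

```shell
# Illustrative default: the build output directory used later in this post.
DEMO_DIR="${DEMO_DIR:-$HOME/omz_demos_build/aarch64/Release}"

# Hypothetical helper: succeeds if the named demo exists and is executable.
# $1 is the demo name; $2 optionally overrides the directory to look in.
demo_built() {
    [ -x "${2:-$DEMO_DIR}/$1" ]
}

if demo_built human_pose_estimation_demo; then
    echo "human_pose_estimation_demo is ready"
else
    echo "human_pose_estimation_demo not found in $DEMO_DIR"
fi
```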

Running the Demos

With the demos compiled, we can now download the required models and run the human pose detection demo. Follow these steps:

Download the models needed for the demo by running the following command:

omz_downloader --list ~/open_model_zoo/demos/human_pose_estimation_demo/cpp/models.lst -o ~/models/ --precision FP16

This command will download the necessary models for the demo and save them in the ~/models/ directory.

Download the test video that will be used for the demo:

cd ~/
wget https://raw.githubusercontent.com/intel-iot-devkit/sample-videos/master/face-demographics-walking.mp4

This command will download a sample video called face-demographics-walking.mp4.

Once the models and the test video are downloaded, you can run the demo using the following command:

~/omz_demos_build/aarch64/Release/human_pose_estimation_demo -i ~/face-demographics-walking.mp4 -m ~/models/intel/human-pose-estimation-0001/FP16/human-pose-estimation-0001.xml -at openpose

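This command will execute the human pose estimation demo using OpenVINO on the test video. The -i option sets the input video, -m points to the downloaded model file, and -at openpose selects the OpenPose architecture type that this model uses.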
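The model path in the command above follows the layout (download dir)/intel/(model name)/(precision)/(model name).xml. Below is a small sketch that composes that path and reports any missing inputs before you launch the demo. `model_xml` and `missing_inputs` are hypothetical helper names, and the layout is inferred only from the paths shown in this post:

```shell
# Hypothetical helper: compose the model XML path from
# download dir ($1), model name ($2), and precision ($3).
model_xml() {
    printf '%s/intel/%s/%s/%s.xml\n' "$1" "$2" "$3" "$2"
}

# Hypothetical helper: print each argument that is not an existing file.
missing_inputs() {
    for f in "$@"; do
        [ -f "$f" ] || printf '%s\n' "$f"
    done
}

MODEL=$(model_xml "$HOME/models" human-pose-estimation-0001 FP16)
VIDEO="$HOME/face-demographics-walking.mp4"

MISSING=$(missing_inputs "$MODEL" "$VIDEO")
if [ -n "$MISSING" ]; then
    echo "Missing inputs:"
    echo "$MISSING"
else
    echo "Inputs present; run the demo with -i \"$VIDEO\" -m \"$MODEL\" -at openpose"
fi
```

Composing the path in one place makes it easier to switch models or precisions later, as long as the download directory keeps the same layout.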