
TFLite examples

Description

TensorFlow Lite (TFLite) is a collection of tools to convert and optimize TensorFlow models to run on edge devices like Brainy Pi. With TFLite, users can deploy their own custom-trained models on the device, enabling a wide range of ML and AI applications.
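Each example below follows the same basic TFLite inference loop: load a `.tflite` model, allocate tensors, write the input, invoke the interpreter, and read the outputs. The sketch below shows that loop with the `tflite_runtime` `Interpreter` API, together with the affine quantization formula (real = scale * (q - zero_point)) used by quantized models such as int8 ones. It is a minimal illustration, not code from the examples repository; the helper names `run_tflite_model`, `quantize` and `dequantize` are hypothetical, and NumPy is assumed to be installed alongside `tflite_runtime`.

```python
# Minimal sketch of the TFLite inference loop (hypothetical helper names).


def dequantize(values, scale, zero_point):
    """Map quantized integers back to real values: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in values]


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map real values to quantized integers, clamped to the int8 range by default.

    Python's round() rounds exact halves to the nearest even integer.
    """
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def run_tflite_model(model_path, input_data):
    """Run one inference and return every output tensor as a NumPy array."""
    # Imported lazily so the helpers above work without these packages installed.
    import numpy as np
    from tflite_runtime.interpreter import Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()  # reserve memory for all tensors
    input_detail = interpreter.get_input_details()[0]
    output_details = interpreter.get_output_details()

    # Cast the input to the dtype the model expects (e.g. float32 or uint8);
    # its shape must already match input_detail["shape"].
    tensor = np.asarray(input_data, dtype=input_detail["dtype"])
    interpreter.set_tensor(input_detail["index"], tensor)
    interpreter.invoke()
    return [interpreter.get_tensor(d["index"]) for d in output_details]
```

For a quantized model, `input_detail["quantization"]` holds the `(scale, zero_point)` pair that `quantize` and `dequantize` expect.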

INFO

This documentation is for Rbian OS version 0.7.2-beta and TFLite version 2.11.0.

To check the Rbian version, run the following command in a terminal:

os-version

Note: If the command fails or returns an error, the installed Rbian version is older than 0.7.2-beta.

To check the TFLite version, run the following in Python:

import tflite_runtime as tflite

print(tflite.__version__)
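If you want a script to warn when the installed TFLite version differs from the 2.11.0 this page targets, a minimal sketch like the one below compares version strings numerically, so that 2.9 correctly sorts before 2.11. It assumes plain numeric `major.minor.patch` strings (suffixes such as `rc1` are not handled), and the helper names are hypothetical.

```python
def parse_version(text):
    """Turn a 'major.minor.patch' string into a tuple of ints for numeric comparison."""
    return tuple(int(part) for part in text.split("."))


def check_tflite_version(installed, documented="2.11.0"):
    """Return a short message saying how the installed version compares with the docs."""
    if parse_version(installed) == parse_version(documented):
        return "ok"
    if parse_version(installed) < parse_version(documented):
        return f"older than {documented}"
    return f"newer than {documented}"


def report_installed_version():
    """Print the installed tflite_runtime version and how it compares."""
    import tflite_runtime  # imported here so the helpers work without it installed

    print(tflite_runtime.__version__, check_tflite_version(tflite_runtime.__version__))
```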

Note: All the TFLite models used in these examples require TensorFlow version 2.

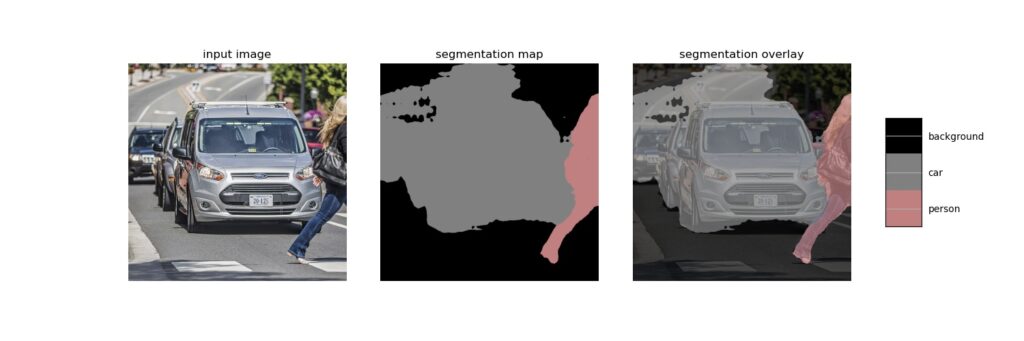

Image Segmentation

Image segmentation is a computer vision task that divides a digital image into multiple regions; in semantic segmentation, as performed here, every pixel is assigned a class label. We will implement an image segmentation application on BrainyPi using DeepLab (mobilenetv2_dm05_coco_voc_trainval) trained on the PASCAL VOC dataset.

The model recognizes the following 20 object classes, plus background:

Person: person

Animal: bird, cat, cow, dog, horse, sheep

Vehicle: aeroplane, bicycle, boat, bus, car, motorbike, train

Indoor: bottle, chair, dining table, potted plant, sofa, tv/monitor
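DeepLab models trained on PASCAL VOC predict 21 classes: the 20 objects above plus background, in the standard VOC index order. If the model returns one score per class for every pixel (an H x W x 21 grid; some exported DeepLab models return the label map directly instead), the label map is obtained by taking the highest-scoring class at each pixel. The sketch below shows that step and how to list the classes present in an image; the function names are hypothetical, and nested lists stand in for NumPy arrays to keep it self-contained.

```python
# PASCAL VOC class order used by DeepLab models (index 0 is background).
VOC_LABELS = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus",
    "car", "cat", "chair", "cow", "dining table", "dog", "horse", "motorbike",
    "person", "potted plant", "sheep", "sofa", "train", "tv/monitor",
]


def label_map(scores):
    """Pick the highest-scoring class index for every pixel.

    `scores` is assumed to be a nested list of shape H x W x num_classes.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


def classes_present(labels):
    """Return the names of the non-background classes found in a label map."""
    found = {idx for row in labels for idx in row if idx != 0}
    return [VOC_LABELS[idx] for idx in sorted(found)]
```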

Prerequisites

Install TFLite as described in the previous steps.

Clone the repository

git clone https://github.com/brainypi/BrainyPi-AI-Examples.git

cd BrainyPi-AI-Examples/TFLite/ImageSegmentation

Run the image segmentation example

python3 imageSegmentation.py

Input

Parameter 1: --image_dir: Image file location (default='images/car.jpg')

Parameter 2: --save_dir: Path where the result image is saved (default='results/result.jpg')

Output

Shows the segmentation mask overlaid on the input image, with class labels.

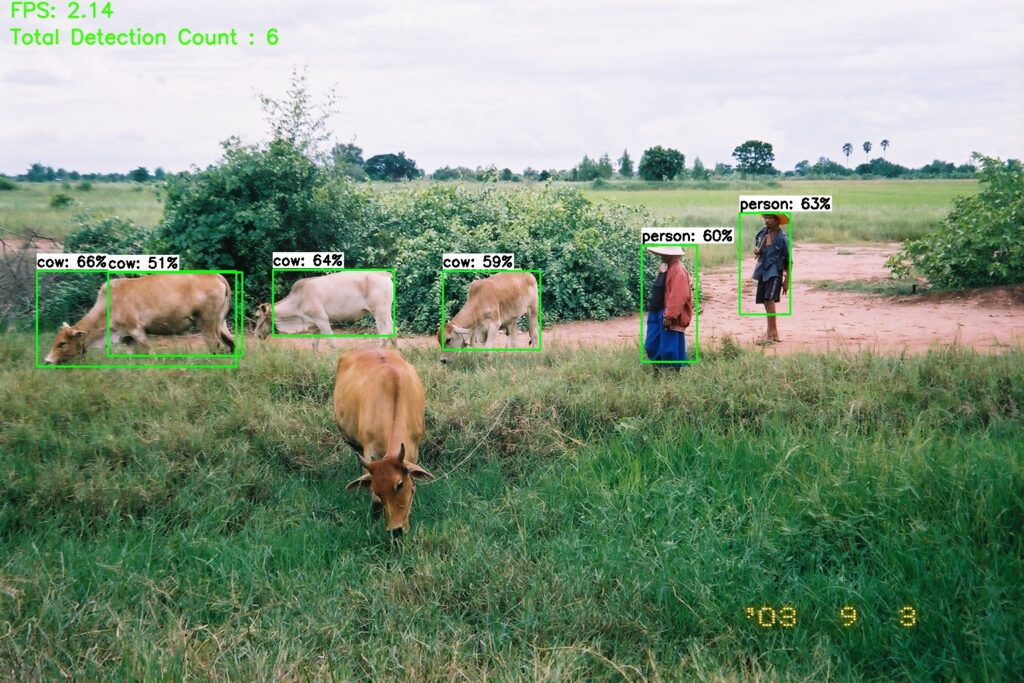

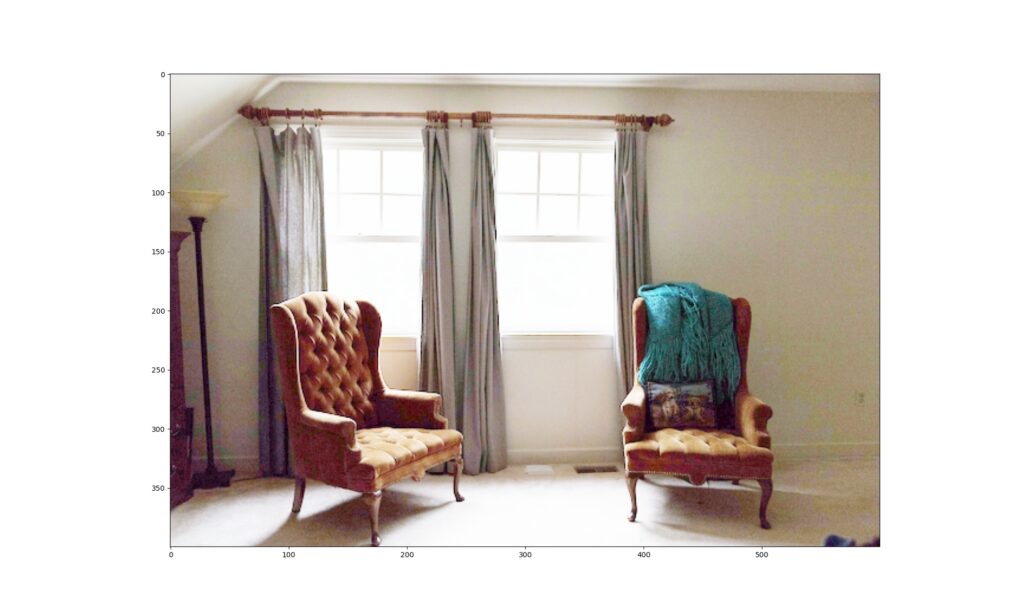
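Overlays like the one above are commonly produced by alpha blending: each segmented pixel becomes (1 - alpha) * image colour + alpha * class colour, while background pixels are left unchanged. The sketch below shows that blend for one pixel and for a row of pixels; the per-class palette and alpha = 0.5 are illustrative assumptions, not values taken from imageSegmentation.py.

```python
def blend_pixel(image_rgb, mask_rgb, alpha=0.5):
    """Alpha-blend one mask colour over one image pixel (channels 0-255)."""
    return tuple(
        round((1 - alpha) * c_img + alpha * c_mask)
        for c_img, c_mask in zip(image_rgb, mask_rgb)
    )


def overlay_row(image_row, label_row, palette, alpha=0.5):
    """Blend each pixel in a row with the colour of its class.

    Background pixels (class 0) are returned unchanged.
    `palette` maps class index -> RGB colour (hypothetical colours).
    """
    return [
        pixel if label == 0 else blend_pixel(pixel, palette[label], alpha)
        for pixel, label in zip(image_row, label_row)
    ]
```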

Object Detection

Object detection is a computer vision technique for locating instances of objects in images or videos. We will implement an object detection application on BrainyPi using the starter model trained on the COCO 2017 dataset.

List of the 91 COCO object categories:

person, bicycle, car, motorcycle, airplane, bus, train, truck, boat, traffic light,

fire hydrant, street sign, stop sign, parking meter, bench, bird, cat, dog, horse,

sheep, cow, elephant, bear, zebra, giraffe, hat, backpack, umbrella, shoe, eye glasses,

handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove,

skateboard, surfboard, tennis racket, bottle, plate, wine glass, cup, fork, knife, spoon, bowl, banana,

apple, sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair, couch, potted plant, bed,

mirror, dining table, window, desk, toilet, door, tv, laptop, mouse, remote, keyboard, cell phone,

microwave, oven, toaster, sink, refrigerator, blender, book, clock, vase, scissors, teddy bear, hair drier,

toothbrush, hair brush

Prerequisites

Install TFLite as described in the previous steps.

Clone the repository

git clone https://github.com/brainypi/BrainyPi-AI-Examples.git

cd BrainyPi-AI-Examples/TFLite/ObjectDetection

Install the prerequisites by running install-prerequisites.sh

bash install-prerequisites.sh

Run the object detection example

python3 objectDetection.py

Input

Parameter 1: --model: Path to the TFLite model file (default='models/model.tflite')

Parameter 2: --labels: Path to the labels file (default='models/labels.txt')

Parameter 3: --image_dir: Single image to perform detection on (default='images/test1.jpg')

Parameter 4: --threshold: Minimum confidence threshold for displaying detected objects (default=0.5)

Parameter 5: --save_dir: Path where the result image is saved (default='results/result.jpg')
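The five parameters above map naturally onto a command-line parser. The sketch below rebuilds them with Python's `argparse` using the documented names and defaults; it illustrates how the options fit together and is not the actual source of objectDetection.py. Treating `--threshold` as a float is an assumption, and `images/street.jpg` is a placeholder for your own image.

```python
import argparse


def build_parser():
    """Command-line options mirroring the documented objectDetection.py parameters."""
    parser = argparse.ArgumentParser(description="TFLite object detection example")
    parser.add_argument("--model", default="models/model.tflite",
                        help="Path to the TFLite model file")
    parser.add_argument("--labels", default="models/labels.txt",
                        help="Path to the labels file")
    parser.add_argument("--image_dir", default="images/test1.jpg",
                        help="Single image to perform detection on")
    parser.add_argument("--threshold", type=float, default=0.5,
                        help="Minimum confidence for displaying a detection")
    parser.add_argument("--save_dir", default="results/result.jpg",
                        help="Path where the result image is saved")
    return parser


# Override two options and keep the rest at their documented defaults.
args = build_parser().parse_args(
    ["--image_dir", "images/street.jpg", "--threshold", "0.6"]
)
```

On the device, the equivalent invocation would be `python3 objectDetection.py --image_dir images/street.jpg --threshold 0.6`.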

Output

Shows the predicted object bounding boxes with their confidence scores.

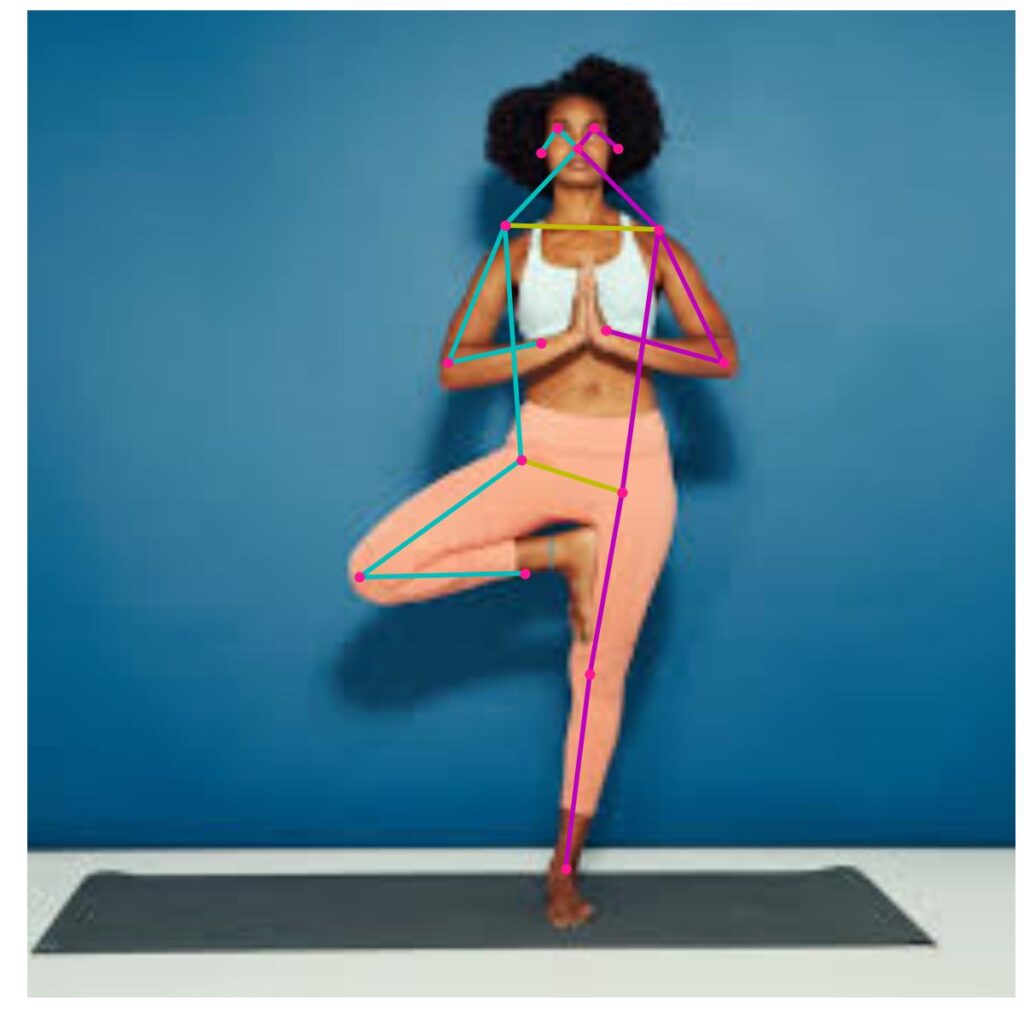
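The boxes drawn above come from a post-processing step: detections scoring below `--threshold` are discarded, class indices are mapped to names from the labels file, and normalized box coordinates are scaled to pixels. The sketch below assumes the common TFLite SSD output layout (each box as [ymin, xmin, ymax, xmax] in the range 0-1, with class values that index directly into the labels list; some label files need an offset). The layout and the function name `postprocess` are assumptions, not taken from objectDetection.py.

```python
def postprocess(boxes, classes, scores, labels, image_width, image_height,
                threshold=0.5):
    """Filter detections by score and convert normalized boxes to pixel coordinates."""
    detections = []
    for box, cls, score in zip(boxes, classes, scores):
        if score < threshold:
            continue  # below --threshold: not drawn
        ymin, xmin, ymax, xmax = box
        detections.append({
            "label": labels[int(cls)],
            "score": score,
            # Pixel box as (left, top, right, bottom), rounded to whole pixels.
            "box": (
                round(xmin * image_width),
                round(ymin * image_height),
                round(xmax * image_width),
                round(ymax * image_height),
            ),
        })
    return detections
```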

Pose Estimation

Pose estimation is a computer vision technique that predicts and tracks the location of a person or object, typically by locating key body points such as joints. We will implement a pose estimation application on BrainyPi using MoveNet (movenet/singlepose/lightning/tflite/int8), which predicts 17 body keypoints.

Prerequisites

Install TFLite as described in the previous steps.

Clone the repository

git clone https://github.com/brainypi/BrainyPi-AI-Examples.git

cd BrainyPi-AI-Examples/TFLite/PoseEstimation

Run the pose estimation example

python3 poseEstimation.py

Input

Parameter 1: --image_dir: Image file location (default='images/pose1.jpeg')

Parameter 2: --save_dir: Path where the result image is saved (default='results/result.jpg')

Output

Marks the keypoints on the image. This single-pose model is designed for images containing only one person.

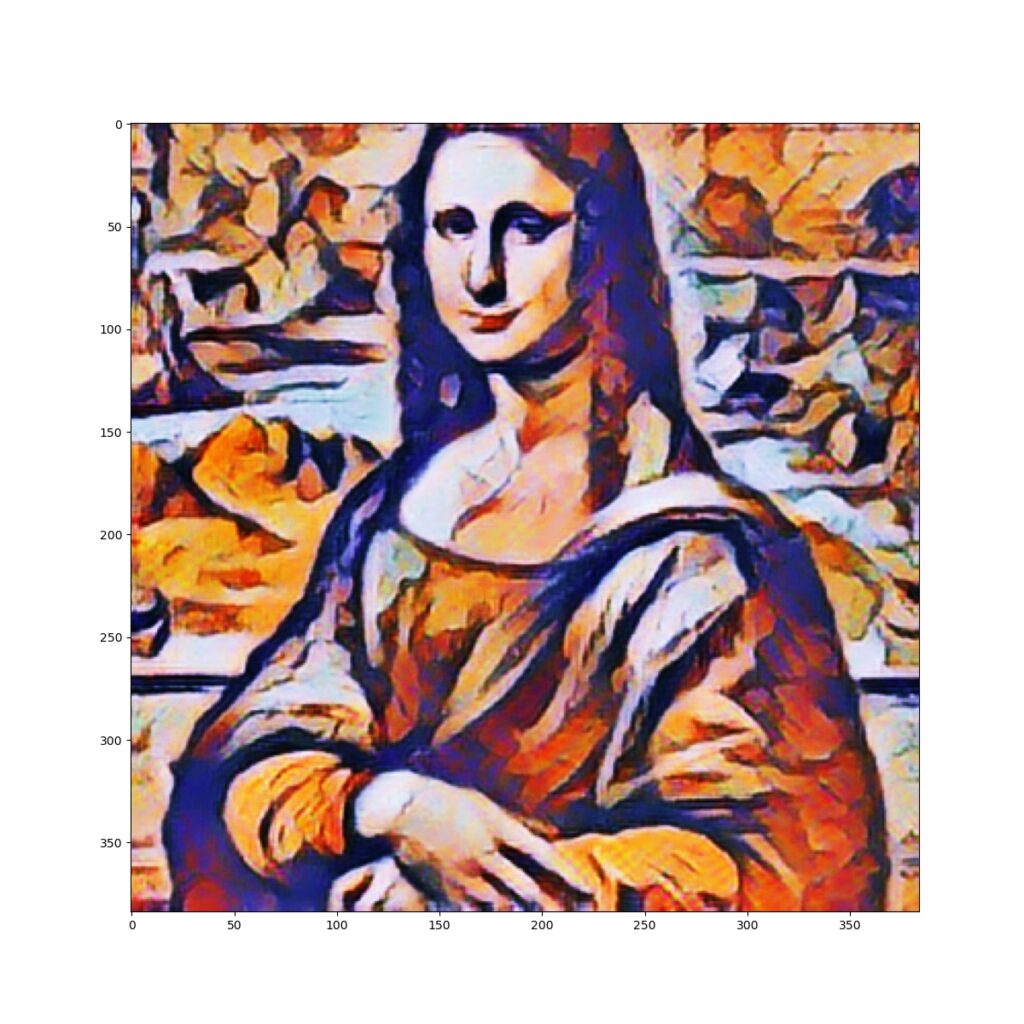
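MoveNet single-pose models output 17 keypoints (tensor shape 1 x 1 x 17 x 3), each as (y, x, score) with y and x normalized to the 0-1 range, in the COCO keypoint order listed below. This minimal sketch, assuming that output has been flattened to a list of 17 triples, converts keypoints to pixel positions and drops low-confidence ones before drawing. The 0.3 score cutoff and the function name are illustrative choices, not values from poseEstimation.py.

```python
# MoveNet keypoint order (COCO convention).
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def keypoints_to_pixels(keypoints, image_width, image_height, min_score=0.3):
    """Convert MoveNet (y, x, score) triples to {name: (x_px, y_px)}.

    Keypoints scoring below `min_score` are skipped (illustrative cutoff).
    """
    points = {}
    for name, (y, x, score) in zip(KEYPOINT_NAMES, keypoints):
        if score >= min_score:
            points[name] = (round(x * image_width), round(y * image_height))
    return points
```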

Style Transfer

Neural style transfer is an optimization technique that manipulates a digital image to adopt the appearance or visual style of another image. We will implement a style transfer application on BrainyPi using the magenta/arbitrary-image-stylization-v1-256 model from TensorFlow Hub.

Prerequisites

Install TFLite as described in the previous steps.

Clone the repository

git clone https://github.com/brainypi/BrainyPi-AI-Examples.git

cd BrainyPi-AI-Examples/TFLite/StyleTransfer

Run the style transfer example

python3 styleTransfer.py

Input

Parameter 1: --style_image_dir: Style image file location (default='images/style.jpg')

Parameter 2: --content_image_dir: Content image file location (default='images/content.jpg')

Parameter 3: --save_dir: Path where the result image is saved (default='results/result.jpeg')
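The TensorFlow Hub guide for magenta/arbitrary-image-stylization-v1-256 suggests a style image of about 256 x 256 pixels (the size used in training), with pixel values as floats in the 0-1 range. A common way to get a square input without distortion is to center-crop to the shorter side before resizing. The sketch below computes that crop box and normalizes pixel values; the function names are hypothetical, and whether styleTransfer.py crops, pads, or simply resizes is not stated here.

```python
def center_crop_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square in an image."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def normalize_pixels(values):
    """Scale 8-bit channel values (0-255) to floats in the 0-1 range."""
    return [v / 255 for v in values]
```

With Pillow, for example, `Image.open(path).crop(center_crop_box(*img.size)).resize((256, 256))` would produce the square style image, where `img` is the opened image.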

Output

Shows the stylized image, which renders the content image in the visual style of the style image.

Super Resolution

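Super-resolution imaging is a class of techniques that enhance the resolution of an imaging system. Note that this example uses the Zero-DCE model, which performs low-light image enhancement (brightening dark images) rather than increasing resolution. We will implement a low-light image enhancement application on BrainyPi using the Zero-DCE model.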
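Zero-DCE brightens an image by estimating curve parameters and repeatedly applying the light-enhancement curve from the Zero-DCE paper, LE(x) = x + alpha * x * (1 - x), where x is a pixel value in [0, 1] and alpha lies in [-1, 1]; the paper uses eight iterations. The sketch below applies that curve to a single pixel value given one alpha per iteration. In the real model the network predicts a separate alpha map for every pixel and channel, so this only illustrates the curve itself; the helper name is hypothetical.

```python
def enhance_pixel(x, alphas):
    """Apply the Zero-DCE light-enhancement curve iteratively to one pixel value.

    x: pixel intensity normalized to [0, 1]
    alphas: one curve parameter in [-1, 1] per iteration
    """
    for alpha in alphas:
        x = x + alpha * x * (1 - x)  # LE(x) = x + alpha * x * (1 - x)
    return x
```

With positive alpha, mid and dark values move up while 0 and 1 stay fixed, which is why the curve brightens shadows without pushing values out of range.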

Prerequisites

Install TFLite as described in the previous steps.

Clone the repository

git clone https://github.com/brainypi/BrainyPi-AI-Examples.git

cd BrainyPi-AI-Examples/TFLite/SuperResolution

Run the super resolution example

python3 superResolution.py

Input

Parameter 1: --image_dir: Image file location (default='images/low.jpg')

Parameter 2: --save_dir: Path where the result image is saved (default='results/result.jpg')

Output

Saves the enhanced image to the path given by --save_dir.

NEED SUPPORT?

First, ensure that the installed OS version matches the version this document is intended for. If they match and the problem persists, please use this Forum link for community help.

If you are an enterprise customer, please use the ticketing system login provided to you for priority support.
